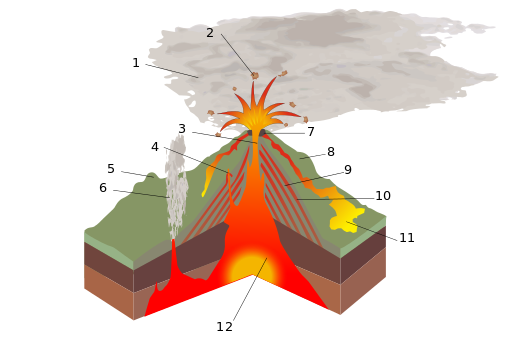

Diagram of a volcanic eruption. Source: Wikimedia. This file is licensed under the Creative Commons Attribution-Share Alike 4.0 International license.

What’s new?

In the 22 May 2021 issue of New Scientist, Michael Roberts of the Cambridge Image Analysis group at the University of Cambridge reported on a study of attempts to use machine learning (an artificial intelligence technique) to diagnose COVID-19 and to predict how patients would fare with the disease. He and his colleagues examined over 300 papers published between 1 January and 3 October 2020 and found that none had produced a useful tool.

What does it mean?

I have written about artificial intelligence (AI) before in this blog. On 15 May 2020, I noted that the inability of many AI-based techniques to explain in human terms how they reached a conclusion limits their usefulness in circumstances where an explanation is needed as part of the decision-making process. On 13 June 2020, I wrote that the computer program Watson had been a great success on the TV game show Jeopardy but had failed to be useful in the medical field. On 2 Jan 2021, I cautioned against the hype concerning AI, in this case regarding a study meant to increase our understanding of whale calls. In my recent (8 May 2021) review of my year of blogging, I noted that AI was the one technology about which I was not generally positive. In this blog post, I am again going to caution about AI hype and about the need for a model that humans can understand.

I am heavily influenced in my opinion about AI by the results of a PhD dissertation I advised at Ohio State in 2002, titled “Quantitative measurement of loyalty under principal-agent relationship.” Keiko (Kay) Yamakawa attempted to detect disloyal insurance agents for a large insurance company, that is, insurance agents who issued policies from several companies and whose behavior indicated they might be failing to recommend products from this particular insurance company. Dr Yamakawa had little success with AI approaches (such as a hidden Markov model) but found that a more traditional approach using control charts was successful. The former approach, hidden Markov models, is based on a search for a statistical model that reproduces the patterns in the data and, even if successful, cannot readily be used to generate an explanation of what it did. The latter approach, control charts, is based on classical hypothesis testing (with all its benefits and faults) to detect whether a process that has been behaving with statistical regularity has moved out of that state of statistical control; the method includes charts that visually display an explanation of the result. Indeed, I was dismayed that it took Kay and me so long to decide to try control charts, since the formulation of the problem was clearly the detection of a process that had moved out of control.
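For readers who have not met control charts, here is a minimal Python sketch of the general idea, not the method used in the dissertation. It assumes a simple individuals chart with three-sigma limits estimated from the average moving range; the monthly policy counts, the function names, and the choice of chart are all hypothetical and chosen only for illustration.

```python
from statistics import mean


def control_limits(baseline):
    """Return (lower, center, upper) three-sigma limits for an individuals chart.

    Sigma is estimated from the average moving range of consecutive points,
    divided by 1.128 (the standard d2 constant for ranges of two observations).
    """
    center = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / 1.128
    return center - 3 * sigma, center, center + 3 * sigma


def out_of_control(values, lower, upper):
    """Return the indices of values that fall outside the control limits."""
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical data: policies written per month by one agent while the
# process was believed to be stable.
baseline = [20, 22, 19, 21, 20, 23, 21, 20, 22, 21]
lower, center, upper = control_limits(baseline)

# Hypothetical later months to be checked against those limits.
new_months = [21, 19, 22, 12, 20]
flagged = out_of_control(new_months, lower, upper)

print(f"limits: {lower:.1f} to {upper:.1f}, center {center:.1f}")
print("months outside the limits (0-based):", flagged)
```

The point of the sketch is the explanation it gives for free: a flagged month is flagged because it lies outside limits that anyone can read off the chart.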

Nineteen years later, AI techniques and computer capabilities for handling huge databases have advanced greatly, but I believe that the general findings of that dissertation still apply. AI techniques are mesmerizing in their promise to detect patterns and apply them to practical situations without the need to understand the domain. Other techniques require more domain knowledge and more understanding of the particular problem being attacked. A tension always exists between those who have powerful techniques and seek to apply them in disparate areas, sometimes in areas where they have little domain knowledge, and those who have the domain knowledge and watch the masters of technique flounder.

Paul Lingenfelter cites political scientist Donald Stokes as describing the statistical technique of factor analysis as “seizing your data by the throat and demanding: Speak to me!” Researchers must always guard against uncovering spurious patterns that occur just by chance (for example, by reserving some data to test findings). Increasing access to big data sets and computing power and increasing use of sophisticated data analysis techniques have created many successes but have also created failures such as this one in COVID diagnosis.
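The value of reserving data can be shown with a small Python sketch. Everything in it is made up for illustration: the labels and the 200 candidate "predictors" are pure coin flips, so any pattern found is spurious by construction. The sketch picks the predictor that agrees best with the labels on the first half of the data and then scores that same predictor on the reserved half.

```python
import random


def agreement(feature, labels):
    """Fraction of cases in which a yes/no feature matches the yes/no label."""
    return sum(f == y for f, y in zip(feature, labels)) / len(labels)


rng = random.Random(0)  # fixed seed so the run is repeatable
n_train, n_holdout, n_features = 30, 30, 200
n = n_train + n_holdout

# Labels and features are independent coin flips: there is nothing to find.
labels = [rng.random() < 0.5 for _ in range(n)]
features = [[rng.random() < 0.5 for _ in range(n)] for _ in range(n_features)]

# Search the training half for the feature that "predicts" the labels best.
train_scores = [agreement(f[:n_train], labels[:n_train]) for f in features]
best = max(range(n_features), key=train_scores.__getitem__)
train_acc = train_scores[best]

# Score that same feature on the data that was held back.
holdout_acc = agreement(features[best][n_train:], labels[n_train:])

print(f"best feature on training data: {train_acc:.2f}")
print(f"same feature on reserved data: {holdout_acc:.2f}")
```

Searching enough candidates will nearly always turn up one that looks impressive on the data it was chosen from. Because the reserved cases played no part in the search, the score on them should sit near one half, which is all a coin flip can offer.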

In 1947, economist Tjalling Koopmans wrote a cautionary article titled “Measurement Without Theory.” In reviewing a book on business cycles, Koopmans lamented the authors’ attempt to measure and analyze data without the use of theory. Koopmans wrote:

Measurable effects of economic actions are scrutinized, to all appearance, in almost complete detachment from any knowledge we may have of the motives of such actions. The movements of economic variables are studied as if they were the eruptions of a mysterious volcano whose boiling caldron can never be penetrated. There is no explicit discussion at all of the problem of prediction, its possibilities and limitations, with or without structural change, although surely the history of the volcano is important primarily as a key to its future activities. There is no discussion whatever as to what bearing the methods used, and the provisional results reached, may have on questions of economic policy.

Almost 75 years later, those sentences still bite.

What does it mean for you?

In my 11 July 2020 blog on the topic of models, I cited the quote “the purpose of modeling is insight, not numbers.” In creating models of real world systems and in analyzing data, I urge you to focus on understanding the system, not just on finding empirical patterns. A black box that takes input and gives output is less useful in the long run than a transparent model that promotes understanding. You should consciously be building models – mental or mathematical – of your organization and the environment in which it functions.

Where can you learn more?

Koopmans’s article was published in The Review of Economic Statistics, volume 29, number 3, August 1947, pages 161-172.

This work is licensed under a Creative Commons Attribution 4.0 International License.

And speaking of AI and volcanoes, I cannot think of a more spectacular (and hilarious) example than Microsoft’s failed AI experiment, “Tay”.

Tay (Thinking About You) was an AI “bot” designed to think and talk like a 19-to-25-year-old woman. It was expected to pass the Turing Test with ease. Microsoft gave Tay a live Twitter account (Big Mistake #1) and free rein (Big Mistake #2).

Within 16 hours, Tay had become an anti-Semitic, bigoted, Holocaust-denying, feminist-bashing Trump supporter.

The AI experts at Microsoft had some ‘splainin to do.

One thing is clear: Tay did indeed learn (and she learned very quickly).

You cannot possibly make a judgement about Tay without getting into some very contentious political topics and then making value judgements about them. And it is not just a simple matter of GIGO, either (as everyone claims). As we move closer to more realistic AI, we will, at some point, simply have to abandon the “blank slate” theory of human nature.

More here:

https://infogalactic.com/info/Tay_(bot)

Of course, Microsoft has an excuse:

https://blogs.microsoft.com/blog/2016/03/25/learning-tays-introduction/